Track and field races usually have fully automatic timing (FAT) systems which consist of hardware and software that are used to accurately record the times that each runner finished at. Most systems on the market are expensive because they use specialized hardware. Our goal in this project is to create an FAT system that can operate using video from a cellphone. The output of a normal FAT system is an image where the horizontal axis represents time and each column of pixels is taken directly across the finish line. In addition to producing these sort of images, we would like our system to be able to identify the hip number on each finisher so that a list of finishers and their times can be produced automatically.

Our pipeline can be divided into two sections, runner identification, and hip number localization and classification.

Our inputs were stationary videos of a finish line with runners occasionally passing through the frame.

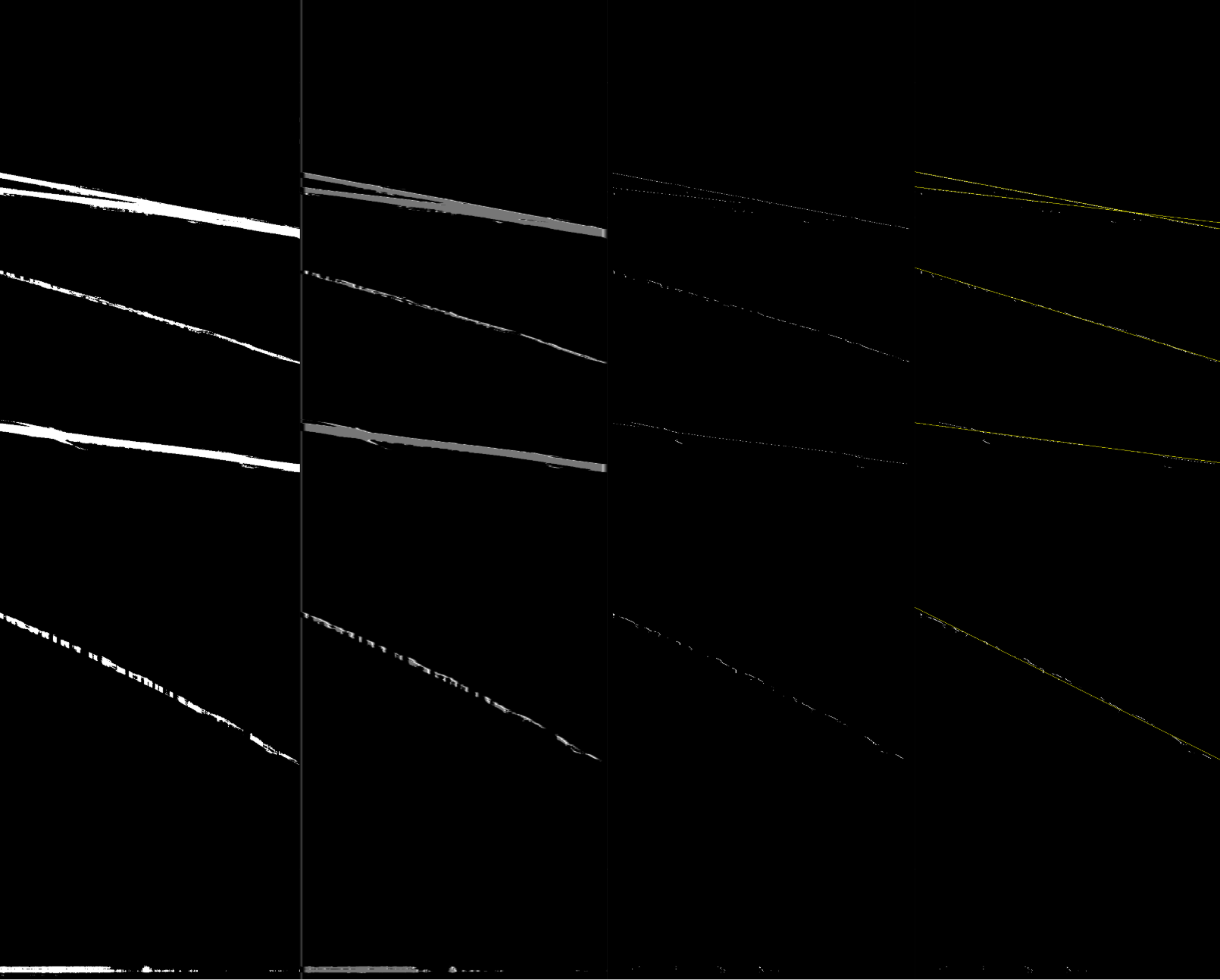

To start, a background frame (usually the first) was subtracted from each frame, so that the modified frames were mostly black except for the runners.

Each frame was then convolved with an hx1 filter so that the a wx1 image was left.

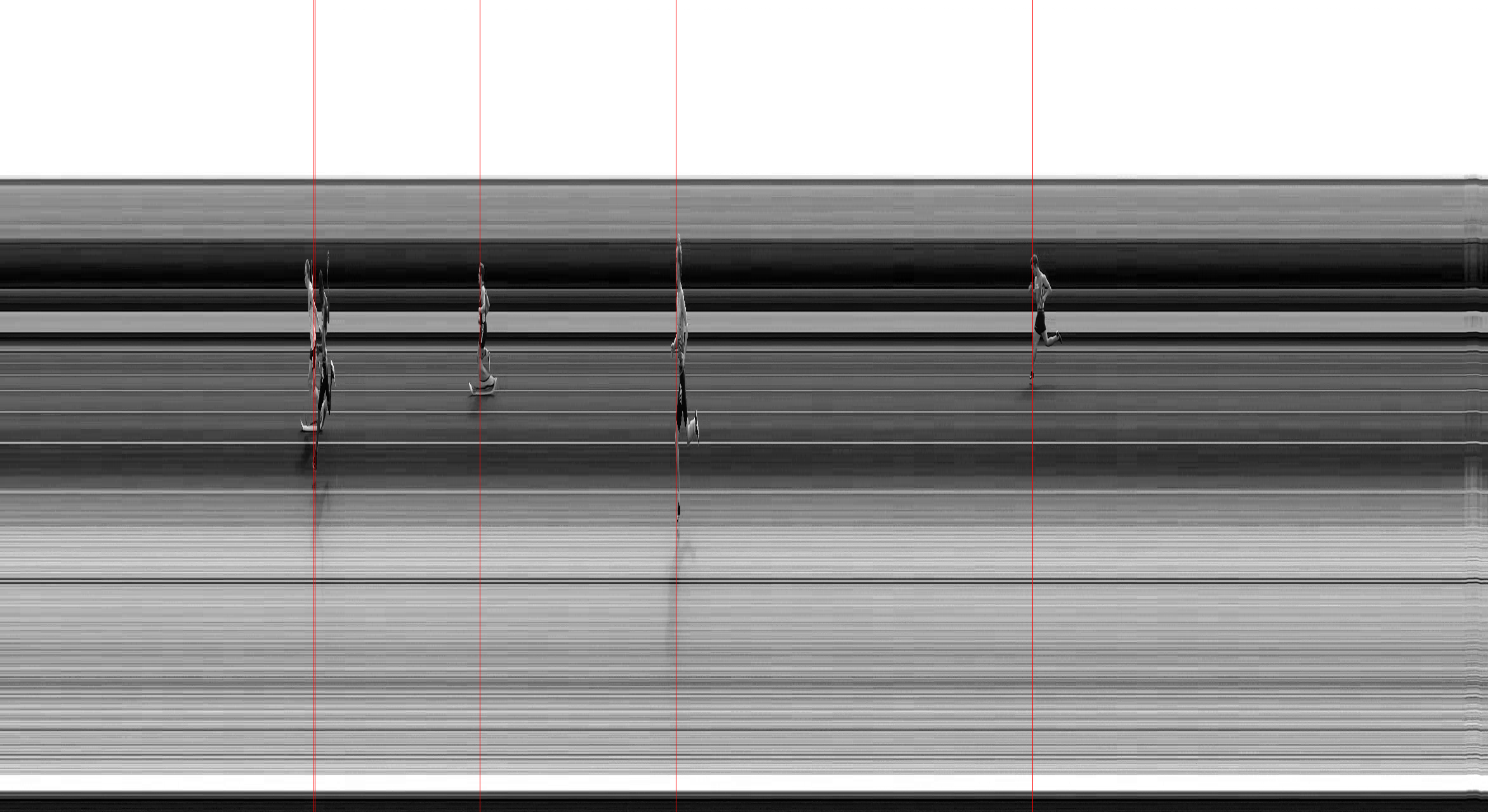

These wx1 images were stacked for each frame, and RANSAC was used to fit through all the pixels above a certain threshold.

Each line represented a single runner, and the intersection of these lines with the finish line gave us the finish time of the runners.

After discovering the finishing time of each runner, we could extract the frame that shows them finishing. This frame could then be convolved with an hx1 filter again, as well as a wx1 filter. By seeing where the outputs of these convolutions were greater than some threshold, we could get a bounding box for roughly where the runner appears in the frame. The window within the box was then shrunken slightly to focus on the hip region. Then, a CNN trained to recognize hip numbers was scanned across the window, and the location with the highest response was taken to be the hip number. The hip number was cleaned slightly and then passed into an MNIST trained classifier to get the digit of the hip number.

Our system did fairly well at identifying the point at which each runner crossed the finish line, although there were usually some false positives. The automatic hip number reading was not quite as accurate. The first network did a decent job of picking the actual hip number out of the window it was given, but the MNIST trained classifier did not predict the correct digit with great accuracy. It would have been better if we had trained on a dataset of actual hip numbers, rather than hand draw digits, but unfortunately we did not have access to a dataset with many unique hip numbers. Overall, we are able to detect a finish with about 80% accuracy and report the hip numbers with about 30-40% accuracy.